Queues

A queue is a processor of jobs that controls the number of job instances that can be running at a time and the delay between runs. Through queue configuration, you can use server resources more efficiently.

You can create a queue inside a job (local queue) or define a queue as a standalone object (global queue). A local queue processes only the instances of the job in which the local queue has been configured. A global queue can process instances of one job and instances of different jobs.

Global queues provide a flexible mechanism to manage a server load on a single FlowForce machine as well as on a cluster (see below).

Local and global queues in a cluster environment (Advanced Edition)

Setting up a cluster means that processing is distributed among cluster members: one master machine and one or more worker machines. For a global queue, you can select a cluster member to run on, which can be the master or any worker, only the master, or only a worker. With local queues, jobs can run only on the master machine and not on any other cluster member.

Security considerations

Queues utilize the same security access mechanism as any other FlowForce Server configuration objects. Namely, a user must have the Define execution queues privilege in order to create queues (see also Define Users and Roles). In addition, users can view queues and assign jobs to queues if they have appropriate container permissions (see also How Permissions Work). By default, any authenticated user gets the Queue - Use permission, which means they can assign jobs to queues.

To restrict access to queues, navigate to the container where the queue is defined and change the permission of the container to Queue - No access for the role authenticated. Next, assign the permission Queue - Use to any roles or users that you need. For more information, see Restrict Access to the /public Container.

For information about distributed processing, see Cluster. For information about creating local and standalone queues, see the subsections below.

Local queues

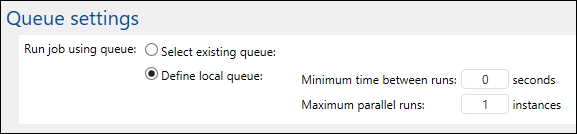

To configure a local queue, select the Define local queue option from the job configuration page and specify your queue preferences. The image below illustrates the default queue settings. For details about the Minimum time between runs and Maximum parallel runs properties, see Queue Settings below.

Global queues

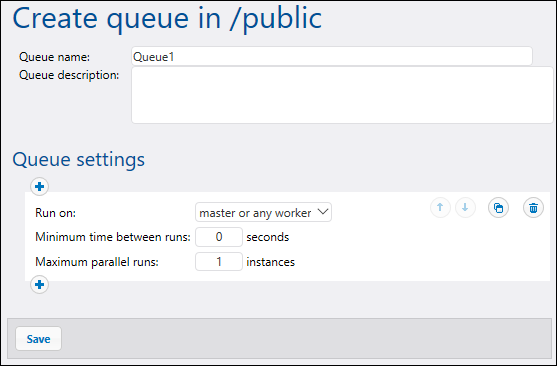

To create a queue as a standalone object, take the steps below:

1.Open the Configuration page and navigate to the container where you want to create a queue.

2.Click Create and select Create Queue (screenshot below).

3.Enter a queue name, and, optionally, a description.

4.Configure the relevant settings. For details, see Queue Settings below.

5.Click Save.

Queue settings

The queue-related settings are listed below.

Setting | Description |

|---|---|

Queue name | This is a mandatory field that represents the name of a queue. The name may contain only letters, digits, single spaces, and the underscore (_), dash (-), and full stop (.) characters. It may not start or end with spaces.

|

Queue description | Optional description.

|

Run on (Advanced Edition) | Specifies how all job instances from this queue are to be run:

•Master or any worker: Job instances that are part of this queue will run on the master or on an available worker machine, depending on available server cores. •Master only: Job instances will run only on the master machine. •Any worker only: Job instances will run on any available worker but never on the master.

|

Minimum time between runs | A queue provides execution slots. The number of available slots is governed by the maximum parallel runs setting multiplied by the number of workers assigned according to the currently active rule. Each slot will execute job instances sequentially.

The Minimum time between runs setting keeps a slot marked as occupied for a short duration after a job instance has finished, so it will not pick up the next job instance right away. This reduces maximum throughput for this execution queue, but provides CPU time for other execution queues and other processes on the same machine.

|

Maximum parallel runs | This option defines the number of execution slots available in a queue. Each slot executes job instances sequentially, so the setting determines how many instances of the same job may be executed in parallel in the current queue.

Note, however, that the number of instances you allow to run in parallel will compete over available machine resources. Increasing this value could be acceptable for queues that process lightweight jobs that do not perform intensive I/O operations or need significant CPU time. The default value (1 instance) is suitable for queues that process resource-intensive jobs, which helps ensure that only one such heavyweight job instance is processed at a time.

This option does not affect the number of maximum parallel HTTP requests accepted by FlowForce Server (e.g., HTTP requests from clients that invoke jobs configured as Web services). For details, see Reconfiguring FlowForce Server pool threads.

|

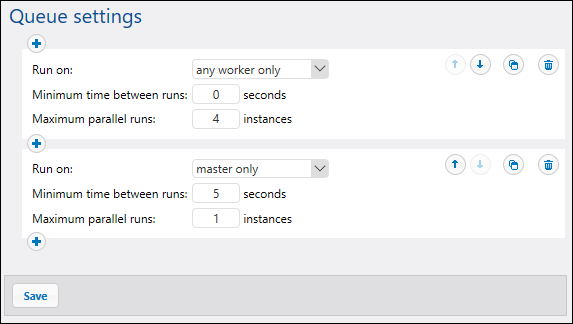

Multiple sets of queue settings (Advanced Edition)

You can define multiple sets of queue settings, each with different processing requirements, by clicking the  button. To change the priority of queue configurations, use the

button. To change the priority of queue configurations, use the  and

and  buttons. For example, you can define a rule for the case in which only the master is available and another rule for the case in which both the master and its workers are available. This enables you to create a fallback mechanism for the queue in case a cluster member becomes unavailable, for instance.

buttons. For example, you can define a rule for the case in which only the master is available and another rule for the case in which both the master and its workers are available. This enables you to create a fallback mechanism for the queue in case a cluster member becomes unavailable, for instance.

When processing queues, FlowForce Server constantly monitors the state of the cluster and its members. If you have defined multiple queue rules, FlowForce Server will evaluate them in the defined order, from top to bottom, and pick the first rule that has at least one cluster member assigned according to the Run On setting. For more information about operation in master and worker modes, see Cluster.

Example

As an example, let's consider a setup where the cluster includes one master and four worker machines. The queue settings are defined as shown below:

With the configuration illustrated above, FlowForce would process the queue as follows:

•If all workers are available, the top rule will apply. Namely, up to 16 job instances can run simultaneously (4 instances for each worker). The minimum time between runs is 0 seconds.

•If only three workers are available, the top rule will still apply. Namely, up to 12 job instances can run simultaneously, and the minimum time between runs is 0 seconds.

•If no workers are available, the second rule will apply: Only 1 instance can run at a given time, and the minimum time between runs is 5 seconds.

This kind of configuration makes execution still possible in the absence of workers. Notice that the master only rule is stricter (1 instance only, and 5 seconds delay between runs) so as not to take away too much processing power from the master machine when all the workers fail.

Assign jobs to queues

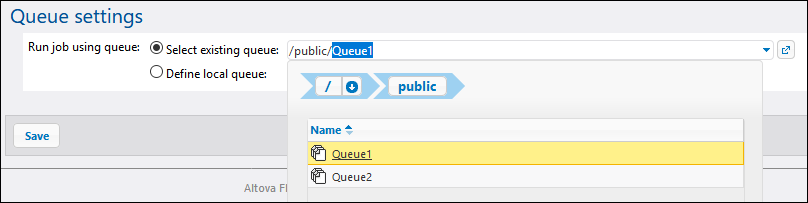

After configuring a queue, you will need to assign a job to it on the job configuration page. In order to do this, take the steps below:

1.Open the configuration of the job that you wish to assign to the queue.

2.Navigate to the queue settings at the bottom of the page.

3.Select the Select existing queue option and provide the path to the desired queue object (screenshot below).

Priority in a queue (Advanced Edition)

In FlowForce Server Advanced Edition, you can assign priority to jobs in a queue. Priority is estimated based on all the jobs assigned to the queue. Priority can be low, below normal, normal, above normal, or high. The default priority is normal. You can set priority for any trigger type. If your job has multiple triggers configured, you can select different priority values for them, if necessary.

Global queues

Setting trigger priority is particularly relevant to global queues, because you can decide which jobs are more important in a queue and should be fired first. In most cases, a job will have only one trigger. A job whose trigger has a higher priority and whose trigger conditions have been met will fire first.

Assuming there are several jobs in a global queue, and each job has several triggers of different priority, FlowForce will first check the triggers of higher priority. If the triggers' conditions are not fulfilled, FlowForce Server will then go on to check the triggers of lower priority. For a job with several triggers, it would make more sense to set the same priority value for all of them (e.g., high priority if the job is more important than others in the queue).

Local queues

A local queue processes instances of one and the same job. If there is only one trigger configured, the priority value will be ignored. If there are several triggers of different priority, the triggers will compete with each other. For example, a job has a timer and a file-system trigger. The timer has a lower priority, whereas the file-system trigger has a higher priority. If the timer's condition has been fulfilled, and if there are no files to process, the timer will start the job earlier than the second trigger. However, if there are multiple files to process, the timer will wait, and the file-system trigger will be given priority.