Processing the Groupon API – Epilogue

Rare edge cases can derail loosely coupled data mapping applications. This is especially true when you are consuming large datasets available over the Internet and have little or no influence over the source data. In this article we describe a debugging technique that lets developers working on data mapping and transformation projects quickly identify and accommodate unexpected data in a stream from a remote source. The Problem Last summer we wrote a series of blog posts describing how to work with the Groupon API to retrieve a subset of offers in all Groupon cities and format the list for a web browser or mobile device.  We concluded with a command line to run a MapForce data mapping that calls the Groupon API over 150 times — once for each Groupon city, then filters the data to extract deals sold on the Internet instead of a physical location, and formats the results in HTML using StyleVision. Every morning we run the command line in a batch file that saves the HTML output on a local server so our colleagues can check it out with any Web browser to find interesting offers from all over the country. The mapping ran fine for more than two months until one day it failed with this error message: “Source-value “” of type dateTime could not be converted into target-type dateTime.” The specific explanation is that somewhere in the mapping where we expected a dateTime, we received an empty value. On a more abstract level, the error suggests a potential defect in the logic of our mapping strategy. Every time we call the Groupon API we receive a well-formed XML data stream enclosed in a <response> element, but the API specs do not include an XML Schema defining the data that may be returned. When we developed our mapping we needed to analyze the raw data and select the output we wanted, so our first step was to call the API to capture all the Groupon deals for one large metro area. We assumed we would get a large enough data sample to include every possible option in the API response. After our mapping ran successfully for two months, the API finally delivered a rare edge case that did not fit the pattern we expected. Debugging Tools MapForce provides debugging help. We can run our data mapping using the MapForce built in execution engine to see more details in the Messages window.

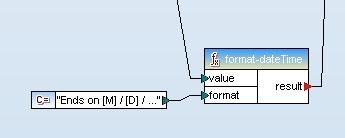

We concluded with a command line to run a MapForce data mapping that calls the Groupon API over 150 times — once for each Groupon city, then filters the data to extract deals sold on the Internet instead of a physical location, and formats the results in HTML using StyleVision. Every morning we run the command line in a batch file that saves the HTML output on a local server so our colleagues can check it out with any Web browser to find interesting offers from all over the country. The mapping ran fine for more than two months until one day it failed with this error message: “Source-value “” of type dateTime could not be converted into target-type dateTime.” The specific explanation is that somewhere in the mapping where we expected a dateTime, we received an empty value. On a more abstract level, the error suggests a potential defect in the logic of our mapping strategy. Every time we call the Groupon API we receive a well-formed XML data stream enclosed in a <response> element, but the API specs do not include an XML Schema defining the data that may be returned. When we developed our mapping we needed to analyze the raw data and select the output we wanted, so our first step was to call the API to capture all the Groupon deals for one large metro area. We assumed we would get a large enough data sample to include every possible option in the API response. After our mapping ran successfully for two months, the API finally delivered a rare edge case that did not fit the pattern we expected. Debugging Tools MapForce provides debugging help. We can run our data mapping using the MapForce built in execution engine to see more details in the Messages window.  The lines labeled Related location are hyperlinked back to components in the mapping where the error occurred. Clicking on the result error takes us to a format-dateTime function.

The lines labeled Related location are hyperlinked back to components in the mapping where the error occurred. Clicking on the result error takes us to a format-dateTime function.  We can either click the “” error or trace the value connector to identify the input element to the format-dateTime function. Either way, we locate the element that triggered the error.

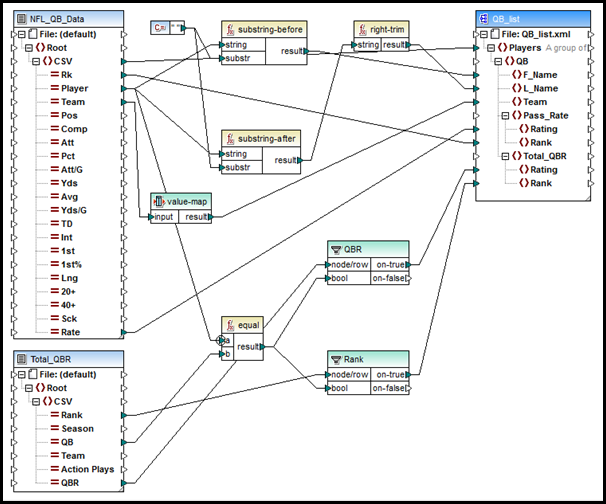

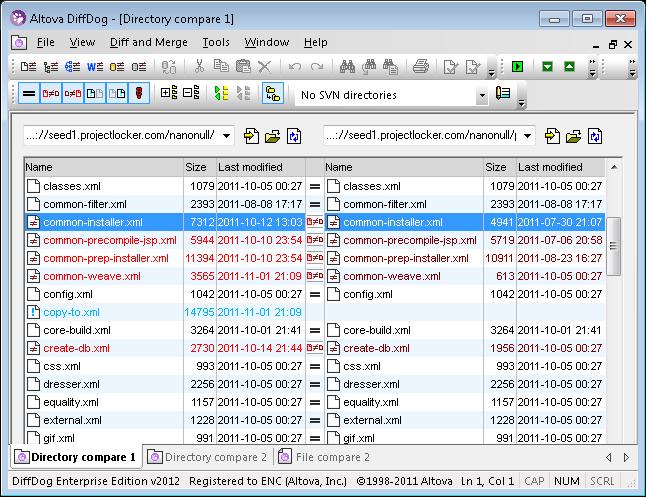

We can either click the “” error or trace the value connector to identify the input element to the format-dateTime function. Either way, we locate the element that triggered the error.  The suspect element resides in the input component that captures all the data returned by our calls to the Groupon API before any filtering or conversion takes place. When we designed the mapping, the endAt element in our sample data always reported the ending date and time for each Groupon offer, but for some reason we must have received an empty value in this field. If the error had occurred by running a local input file we could simply examine the file contents, but in this case the data came from multiple URLs, and is only held temporarily until it is mapped to the output component. Fortunately, we can apply a trick to easily modify the mapping and preserve all data received from the Groupon API. We simply copy the input component and paste a duplicate into the mapping. We can connect the response element from the original to the duplicate, which simultaneously maps all the child elements between the components.

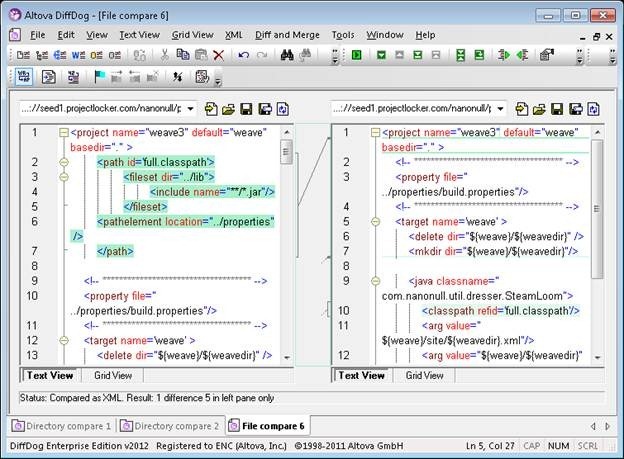

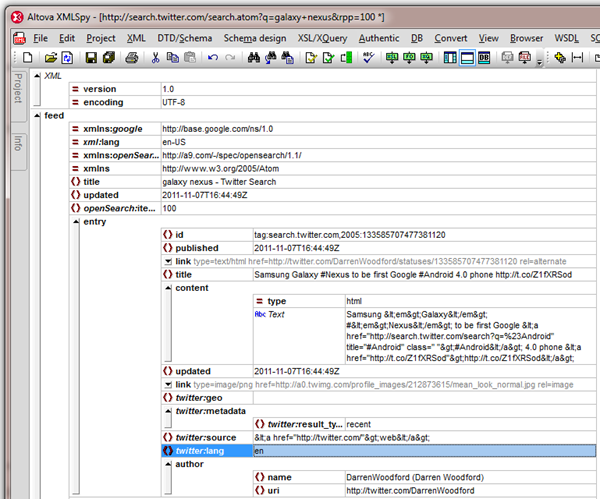

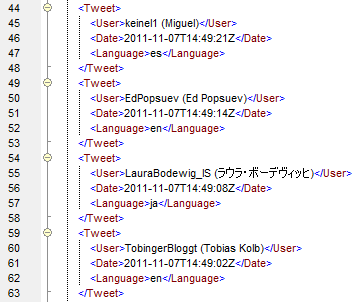

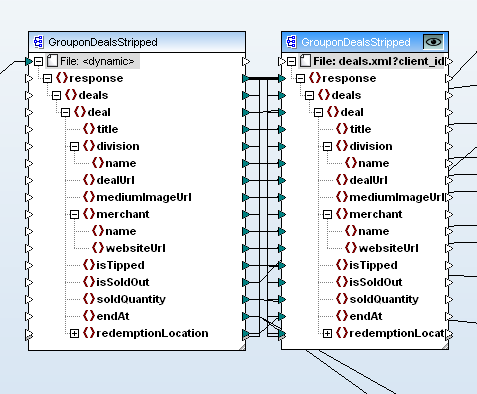

The suspect element resides in the input component that captures all the data returned by our calls to the Groupon API before any filtering or conversion takes place. When we designed the mapping, the endAt element in our sample data always reported the ending date and time for each Groupon offer, but for some reason we must have received an empty value in this field. If the error had occurred by running a local input file we could simply examine the file contents, but in this case the data came from multiple URLs, and is only held temporarily until it is mapped to the output component. Fortunately, we can apply a trick to easily modify the mapping and preserve all data received from the Groupon API. We simply copy the input component and paste a duplicate into the mapping. We can connect the response element from the original to the duplicate, which simultaneously maps all the child elements between the components.  Our original input component is now connected to two output components. We can select which output component will be generated by the MapForce built-in execution engine by clicking the eye icon at the top right corner of any output component. The new output component simply saves a copy of everything in the input component. When we examine the raw data using XMLSpy, sure enough we find an empty element where we expected a date and time:

Our original input component is now connected to two output components. We can select which output component will be generated by the MapForce built-in execution engine by clicking the eye icon at the top right corner of any output component. The new output component simply saves a copy of everything in the input component. When we examine the raw data using XMLSpy, sure enough we find an empty element where we expected a date and time: ![]() The Solution Now that we know an offer might have no specific end time, we can plan for that possibility in the mapping. In the revised treatment of the endAt element, we do an if-test before the original format-dateTime function and provide an alternate output when the endAt element is empty.

The Solution Now that we know an offer might have no specific end time, we can plan for that possibility in the mapping. In the revised treatment of the endAt element, we do an if-test before the original format-dateTime function and provide an alternate output when the endAt element is empty.  We had to work fast because all Groupon data is time sensitive. The edge case would eventually expire and disappear from the data stream. This experience showed us how important it is to have powerful debugging tools and to use them creatively, even after you think a data mapping project is running successfully! Altova MapForce is available in a free trial – the next edge case you solve could be your own. Editor’s Note: Our original series on mapping data from the Groupon API ran in three parts you can see by clicking the links here: Part 1 of Processing the Groupon API with Altova MapForce describes how to create dynamic input by collecting data from multiple URLs. Processing the Groupon API with MapForce – Part 2 describes how we filtered data from the API and defined the output to extract only the most interesting details. Processing the Groupon API – Part 3 describes formatting the output as a single HTML document optimized for desktop and mobile devices, and reviews ways to automate repeat execution.

We had to work fast because all Groupon data is time sensitive. The edge case would eventually expire and disappear from the data stream. This experience showed us how important it is to have powerful debugging tools and to use them creatively, even after you think a data mapping project is running successfully! Altova MapForce is available in a free trial – the next edge case you solve could be your own. Editor’s Note: Our original series on mapping data from the Groupon API ran in three parts you can see by clicking the links here: Part 1 of Processing the Groupon API with Altova MapForce describes how to create dynamic input by collecting data from multiple URLs. Processing the Groupon API with MapForce – Part 2 describes how we filtered data from the API and defined the output to extract only the most interesting details. Processing the Groupon API – Part 3 describes formatting the output as a single HTML document optimized for desktop and mobile devices, and reviews ways to automate repeat execution.